Secure Handling of PCI and PII Data during Data Ingestion to Data Lakes

As enterprises increasingly migrate their analytical workloads and storage to cloud data lakes (AWS S3, Google Cloud Storage, Azure ADLS), managing sensitive data such as Payment Card Information (PCI), Protected Health Information (PHI), and Personally Identifiable Information (PII) becomes crucial. Apache Kafka Streams, a lightweight and distributed stream processing library, offers robust capabilities to process data in motion. This article discusses how Kafka Streams can be used to handle PCI and PII data securely during data ingestion into data lakes. We explore design patterns, best practices, and regulatory compliance mechanisms, focusing on data encryption, masking, and governance.

The Challenge of Ingesting Sensitive Data into Data Lakes

Data lakes serve as centralized repositories for storing vast amounts of structured, semi-structured, and unstructured data. While they offer immense flexibility for analytics and storage, the ingestion of sensitive data such as PCI and PII requires stringent security and compliance measures. Apache Kafka Streams provides a powerful tool for stream processing, enabling real-time transformation, enrichment, and secure handling of sensitive data during ingestion.

Complexities in Handling PCI and PII Data

- Regulatory Compliance: PCI data is governed by the Payment Card Industry Data Security Standard (PCI DSS). PII falls under various regulatory frameworks such as GDPR, CCPA, and HIPAA.

- Security Risks: Data breaches during transmission or storage. Unauthorized access to sensitive information.

- Data Transformation: Ensuring sensitive data is masked, tokenized, or encrypted before landing in the data lake.

- Scalability: Efficiently processing high-velocity streams without compromising security.

Kafka Streams Overview

Kafka Streams is a lightweight Java library for building real-time, distributed stream processing applications directly on Apache Kafka. It builds on the Apache Kafka producer and consumer APIs and leverages the native capabilities of Kafka to offer data parallelism, distributed coordination, fault tolerance, and operational simplicity. It can therefore process and transform data streams from Kafka topics with scalability, fault tolerance, and optional exactly-once processing semantics. It includes a high-level DSL, support for stateful and stateless operations, and embedded state stores. Typical use cases for Kafka Streams are real-time analytics, event-driven applications, data transformation, and monitoring. Unlike frameworks such as Spark Streaming and Flink, it requires no separate cluster and runs as part of the application itself.

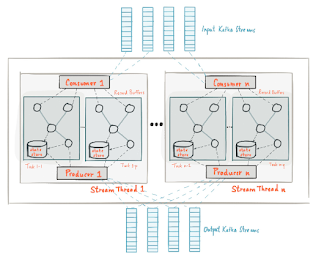

Kafka Streams Architecture

Securing PCI and PII Data with Kafka Streams

To secure PCI and PII data using Kafka Streams, a source processor reads records from a source Kafka topic, a stream processor (node) encrypts, masks, tokenizes, or removes the sensitive fields, and a sink processor writes the protected records to the target topics.
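Of the four options, removal is the simplest to illustrate. The following is a minimal sketch, assuming each record value has already been deserialized into a flat `Map<String, String>`. The class `SensitiveFieldFilter`, the method `dropFields`, and the field names in the usage note are hypothetical and not part of Kafka Streams.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical helper: strips sensitive fields from a record value.
final class SensitiveFieldFilter {

    private SensitiveFieldFilter() {
    }

    // Returns a copy of the record without the sensitive keys.
    // The input map is left untouched, so the function has no side effects.
    static Map<String, String> dropFields(Map<String, String> record, Set<String> sensitiveKeys) {
        Map<String, String> cleaned = new LinkedHashMap<>(record);
        cleaned.keySet().removeAll(sensitiveKeys);
        return cleaned;
    }
}
```

Because the function is pure and works on values only, it has the shape that the stream processor step needs: a lambda such as `value -> SensitiveFieldFilter.dropFields(value, sensitiveKeys)` could be passed to `mapValues()`, with `sensitiveKeys` set to something like `cvv` and `ssn` (illustrative names).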

1. Data Encryption

Encrypt sensitive data fields using cryptographic libraries such as BouncyCastle or the Java Cryptography Extension (JCE) within Kafka Streams processors. This encryption can be applied at the field level to isolate and protect PCI and PII fields, using the mapValues() or process() methods. Encryption keys should be stored securely in a key management system (e.g., AWS KMS, HashiCorp Vault).
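Field-level encryption with the JCE might look like the sketch below. It assumes AES-256 in GCM mode with a fresh random 12-byte IV per value, stored in front of the ciphertext. This is one reasonable choice, not something the article prescribes. The class `FieldEncryptor` and its methods are hypothetical, and `newDemoKey()` generates a local key only so the example is self-contained; in practice the key would come from the key management system.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

// Hypothetical helper: encrypts a single field value with AES-GCM (JCE).
final class FieldEncryptor {
    private static final int IV_BYTES = 12;   // IV length commonly used with GCM
    private static final int TAG_BITS = 128;  // authentication tag length
    private static final SecureRandom RANDOM = new SecureRandom();

    private final SecretKey key;

    FieldEncryptor(SecretKey key) {
        this.key = key;
    }

    // Returns Base64(IV || ciphertext || tag). A new IV is drawn for every call.
    String encrypt(String plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            RANDOM.nextBytes(iv);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ciphertext = cipher.doFinal(plaintext.getBytes(StandardCharsets.UTF_8));
            byte[] out = ByteBuffer.allocate(iv.length + ciphertext.length)
                    .put(iv).put(ciphertext).array();
            return Base64.getEncoder().encodeToString(out);
        } catch (GeneralSecurityException e) {
            // Unchecked, so the method can be called from a lambda.
            throw new IllegalStateException("field encryption failed", e);
        }
    }

    // Reverses encrypt(); fails if the value was tampered with or the key is wrong.
    String decrypt(String encoded) {
        try {
            byte[] in = Base64.getDecoder().decode(encoded);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, in, 0, IV_BYTES));
            byte[] plaintext = cipher.doFinal(in, IV_BYTES, in.length - IV_BYTES);
            return new String(plaintext, StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("field decryption failed", e);
        }
    }

    // Demo only: a locally generated key standing in for one fetched from a KMS.
    static SecretKey newDemoKey() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance("AES");
            generator.init(256);
            return generator.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("AES key generation failed", e);
        }
    }
}
```

The methods throw unchecked exceptions on purpose, so that `encrypt` can be called from inside a `mapValues()` lambda on the one field that needs protection while the rest of the record stays readable.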

2. Data Masking and Tokenization

Data masking and tokenization secure PCI and PII data in Kafka Streams by transforming sensitive information into protected forms. Data masking obscures sensitive data (e.g., replacing parts of credit card numbers with asterisks) to prevent unauthorized access. Tokenization replaces sensitive fields with unique tokens, storing the original data securely in a token vault. These techniques can be implemented during stream processing to ensure only masked or tokenized data is written to Kafka topics. This minimizes the risk of exposing sensitive information while maintaining data usability for downstream applications.
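The two techniques can be sketched as follows. The class `CardProtector` is hypothetical, and its in-memory maps are only a stand-in for a real token vault, which would be a separate, access-controlled service. Keeping the last four digits visible is an assumption made for illustration.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical helper showing masking and tokenization of a card number.
final class CardProtector {
    // Stand-in for a token vault; a real vault lives outside the stream application.
    private final Map<String, String> tokenToValue = new ConcurrentHashMap<>();
    private final Map<String, String> valueToToken = new ConcurrentHashMap<>();

    // Masking: strip non-digits, then replace all but the last four digits with '*'.
    // Values of four digits or fewer are masked completely.
    static String maskPan(String pan) {
        char[] digits = pan.replaceAll("\\D", "").toCharArray();
        int visible = digits.length > 4 ? 4 : 0;
        Arrays.fill(digits, 0, digits.length - visible, '*');
        return new String(digits);
    }

    // Tokenization: the same input always maps to the same random token,
    // so downstream joins and counts on the token still work.
    String tokenize(String value) {
        return valueToToken.computeIfAbsent(value, v -> {
            String token = "tok_" + UUID.randomUUID();
            tokenToValue.put(token, v);
            return token;
        });
    }

    // Looks up the original value; returns null for an unknown token.
    String detokenize(String token) {
        return tokenToValue.get(token);
    }
}
```

Masking is one-way, so it suits fields that downstream users only need to recognize. Tokenization is reversible through the vault, so it suits fields that an authorized system must recover later.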

Kafka Stream Interceptor Architecture to handle PII / PCI

Conclusion

Apache Kafka Streams provides a versatile and secure framework for handling PCI and PII data during ingestion into data lakes. By leveraging encryption, masking, tokenization, and removal, organizations can achieve regulatory compliance and safeguard sensitive information. Implementing these best practices within Kafka Streams workflows ensures secure, scalable, and efficient data processing, making it an essential component of modern data pipelines.
