In this blog post, we will discuss how database developers and data analysts can connect to a remote Hive warehouse to query and analyze data in HDFS.

Step 1: Install Java 21 on the data analyst's system (different user account)

Command:

sudo apt update

sudo apt install openjdk-21-jdk

Step 2: Find the Java Installation Path

Command:

update-alternatives --list java
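Note that the path printed by this command is the java executable itself, whereas JAVA_HOME conventionally points at the JDK directory that contains bin/. A minimal sketch of deriving one from the other follows; the helper name java_home_from_binary is hypothetical, and the example path assumes the default Ubuntu location for OpenJDK 21 on amd64.

```shell
# Hypothetical helper: turn the path of the java executable into a JDK home
# directory by stripping the trailing /bin/java component.
java_home_from_binary() {
  printf '%s\n' "${1%/bin/java}"
}

# Example with the default Ubuntu path for OpenJDK 21 (an assumption).
java_home_from_binary /usr/lib/jvm/java-21-openjdk-amd64/bin/java
```

The printed directory is the value to use for JAVA_HOME in the next step.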

Step 3: Set Java 21 for Only One User

Command:

nano ~/.bashrc

Add the following environment variables:

#JAVA Related Options

export JAVA_HOME=/usr/lib/jvm/java-21-openjdk-amd64

export PATH=$PATH:$JAVA_HOME/bin

Refresh the profile:

source ~/.bashrc

Step 4: Verify Java version

Command:

java -version

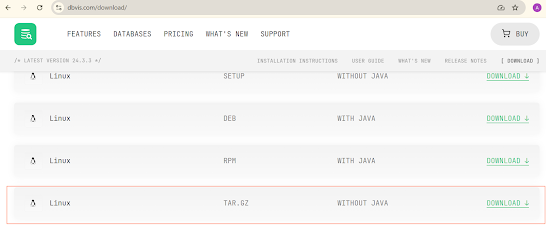

Step 5: Download DbVisualizer

Go to https://www.dbvis.com/download/ and copy the link to the latest Linux distribution.

Command:

wget https://www.dbvis.com/product_download/dbvis-24.3.3/media/dbvis_linux_24_3_3.tar.gz

Extract the binaries:

tar xvfz dbvis_linux_24_3_3.tar.gz

Step 6: Configure the PATH

Command:

nano ~/.bashrc

Add the following lines to the end of the .bashrc file:

#DbVisualizer

export INSTALL4J_JAVA_HOME=/usr/lib/jvm/java-21-openjdk-amd64

export DB_VIS=/home/aksahoo/applications/DbVisualizer

export PATH=$PATH:$DB_VIS/

Refresh the profile:

source ~/.bashrc

Step 7: Start DbVisualizer

Command:

dbvis

Step 8: Create a connection to the remote Hive warehouse

Provide the HiveServer2 details:

Database Server: localhost

Database Port: 10000

Database: default

Database Userid: hdoop

Database Password:
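With the Hive JDBC driver, these fields correspond to a connection URL of the form jdbc:hive2://server:port/database. As a rough sketch, the URL can be assembled in the shell and reused for a quick command-line check before configuring DbVisualizer. The function name hive_jdbc_url is hypothetical, and the beeline line is left commented out because it assumes Hive's client tools are on the PATH.

```shell
# Hypothetical helper: build a HiveServer2 JDBC URL from server, port, database.
hive_jdbc_url() {
  printf 'jdbc:hive2://%s:%s/%s\n' "$1" "$2" "$3"
}

# Values taken from the connection details listed in this step.
url=$(hive_jdbc_url localhost 10000 default)
echo "$url"

# Optional check from a terminal (assumes beeline is installed and on the PATH):
# beeline -u "$url" -n hdoop
```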

Step 9: Allow User Impersonation in Hadoop Core Site (Hadoop user account)

Edit core-site.xml: nano hadoop/etc/hadoop/core-site.xml

Add the following configurations

<property>

<name>hadoop.proxyuser.hdoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hdoop.groups</name>

<value>*</value>

</property>
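A misspelled property name is simply ignored by Hadoop, so it can help to confirm that both entries are present after saving the file. The following is a minimal sketch that only looks for the literal name element in the file; has_property is a hypothetical helper, and it does not validate the XML or the configured value.

```shell
# Hypothetical helper: succeed if the config file ($1) contains a <name>
# element for the given property ($2). Fixed-string match, so dots are literal.
has_property() {
  grep -F -q "<name>$2</name>" "$1"
}

# Path as used in this step, relative to the Hadoop user's home directory.
conf=hadoop/etc/hadoop/core-site.xml
for prop in hadoop.proxyuser.hdoop.hosts hadoop.proxyuser.hdoop.groups; do
  if [ -f "$conf" ] && has_property "$conf" "$prop"; then
    echo "found: $prop"
  else
    echo "missing: $prop"
  fi
done
```

The same helper can be pointed at hive/conf/hive-site.xml to check for hive.server2.enable.doAs.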

Step 10: Allow Impersonation in Hive Configuration (Hadoop user account)

Edit hive-site.xml: nano hive/conf/hive-site.xml

Add the following configurations

<property>

<name>hive.server2.enable.doAs</name>

<value>true</value>

</property>

Step 11: Restart Hadoop and ensure the Hadoop services are up and running (Hadoop user account, in this case hdoop)

Command:

cd /home/hdoop/hadoop/sbin

./stop-all.sh

./start-all.sh

jps
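In the jps listing, a single-node setup started with start-all.sh would normally show NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The sketch below reports whichever of these are absent. The function name missing_daemons is hypothetical, and the expected list is an assumption that should be adjusted for multi-node clusters.

```shell
# Hypothetical helper: read jps output on stdin and print every expected
# daemon that does not appear in it.
missing_daemons() {
  jps_output=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <ClassName>"; compare the second field exactly so that
    # SecondaryNameNode is not mistaken for NameNode.
    if ! printf '%s\n' "$jps_output" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      printf '%s\n' "$daemon"
    fi
  done
}

# Real usage on the Hadoop host:
# jps | missing_daemons
```

An empty result means all five daemons were found.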

Step 12: Refresh the user-to-group mappings

Command:

hdfs dfsadmin -refreshUserToGroupsMappings

Step 13: Start the Hive Metastore service and HiveServer2

Command:

hive --service metastore &

hive --service hiveserver2 &
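HiveServer2 can take a little while before it accepts connections on port 10000, so testing the connection immediately after starting it may fail. A minimal sketch of a wait loop follows, assuming the nc (netcat) utility is installed; wait_for_port is a hypothetical helper and the 60-attempt budget in the usage line is arbitrary.

```shell
# Hypothetical helper: poll host ($1) and port ($2) once per second, up to
# the given number of attempts ($3). Returns 0 once the port accepts a TCP
# connection, 1 if it never does.
wait_for_port() {
  host=$1
  port=$2
  tries=$3
  while [ "$tries" -gt 0 ]; do
    if nc -z "$host" "$port" 2>/dev/null; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Usage, after starting HiveServer2:
# wait_for_port localhost 10000 60 && echo "HiveServer2 is listening"
```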

Step 14: Go to DbVisualizer and test the connection

Step 15: Query Hive tables from DbVisualizer

HQL: select * from employees;
