Apache Spark is an important framework for big data processing, offering high performance, scalability, and versatility for different computational tasks through parallel and in-memory processing mechanisms. This article explores the options available for deploying Spark jobs on the AWS cloud, such as EMR, Glue, EKS, and ECS. It also proposes a centralized architecture for orchestration, monitoring, logging, and alerting that improves observability and operational reliability for Spark workflows.

Overview of Apache Spark

Apache Spark is an open-source distributed computing system that excels in big data processing (volume, variety, and velocity) through its in-memory computation capabilities and fault-tolerant architecture. It supports various applications, including batch processing, real-time analytics, machine learning, and graph computations, making it a critical tool for data engineers and researchers.

Core Strengths of Apache Spark

The following characteristics underscore Spark’s prominence in the big data ecosystem:

Speed: In-memory computation accelerates processing, with speedups of up to 100 times over disk-based frameworks such as Hadoop MapReduce, which writes intermediate results to disk between processing stages.

Ease of Use: APIs in Python, Scala, Java, and R make it accessible to developers across disciplines.

Workload Versatility: Spark accommodates diverse tasks, including batch processing, stream processing, ad-hoc SQL queries, machine learning, and graph processing.

Scalability: Spark scales horizontally to process petabytes of data across distributed clusters.

Fault Tolerance: Resilient distributed datasets (RDDs) record the lineage of transformations that produced them, so lost partitions can be recomputed after a node failure.
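To make the fault-tolerance point concrete, here is a toy model of lineage-based recovery in plain Python. It is not Spark code: `ToyRDD` and its methods are hypothetical names invented for this sketch, and real RDDs track lineage per partition across a cluster. The idea it illustrates is the same, though: a lost partition is rebuilt by replaying the recorded transformations against the durable source.

```python
from functools import reduce


class ToyRDD:
    """Toy model of lineage: a durable source plus an ordered list of transformations."""

    def __init__(self, source_partitions, lineage=()):
        self.source_partitions = source_partitions  # stands in for durable input such as files
        self.lineage = tuple(lineage)                # functions applied to each element, in order
        self.cache = {}                              # partition index -> computed rows

    def map(self, fn):
        # A transformation only extends the lineage; nothing is computed yet.
        return ToyRDD(self.source_partitions, self.lineage + (fn,))

    def compute(self, index):
        """Return a partition, replaying the lineage if no computed copy exists."""
        if index not in self.cache:
            rows = self.source_partitions[index]
            self.cache[index] = [
                reduce(lambda value, fn: fn(value), self.lineage, row) for row in rows
            ]
        return self.cache[index]


rdd = ToyRDD([[1, 2], [3, 4]]).map(lambda x: x * 10).map(lambda x: x + 1)
before = rdd.compute(1)
rdd.cache.pop(1)        # simulate losing the executor that held partition 1
after = rdd.compute(1)  # rebuilt from the source by replaying both maps
```

Only the lost partition is recomputed here; partition 0 is never touched, which is the property that keeps recovery cheap.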

Key Spark Modules

Spark’s modular design supports a range of functionalities:

Spark Core: Handles task scheduling, memory management, and fault recovery.

Spark SQL: Facilitates structured data processing through SQL.

Spark Streaming: Enables real-time analytics.

MLlib: Offers a scalable library for machine learning tasks.

GraphX: Provides tools for graph analytics.

Deployment Modes for Apache Spark

Apache Spark supports multiple deployment modes to suit different operational needs:

Standalone Mode: Built-in cluster management for small to medium-sized clusters.

YARN Mode: Integrates with Hadoop’s resource manager, YARN.

Kubernetes Mode: Leverages Kubernetes for containerized environments.

Mesos Mode: Suitable for organizations already using Apache Mesos, although Mesos support has been deprecated since Spark 3.2.

Local Mode: Ideal for development and testing on a single machine.
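In practice, the deployment mode is selected through the `--master` URL passed to `spark-submit`. The sketch below maps each mode listed above to its master URL format. The helper name `build_spark_submit`, the host name, and the port numbers are placeholders chosen for illustration and would differ in a real environment.

```python
import shlex

# Master URL formats accepted by spark-submit; hosts and ports are placeholders.
MASTER_URLS = {
    "local": "local[*]",
    "standalone": "spark://{host}:7077",
    "yarn": "yarn",
    "kubernetes": "k8s://https://{host}:443",
    "mesos": "mesos://{host}:5050",
}


def build_spark_submit(mode, app, host="cluster.example.internal", deploy_mode=None):
    """Return the spark-submit argument list for the given deployment mode."""
    if mode not in MASTER_URLS:
        raise ValueError(f"unknown deployment mode: {mode}")
    args = ["spark-submit", "--master", MASTER_URLS[mode].format(host=host)]
    if deploy_mode is not None:
        # "client" keeps the driver on the submitting machine, "cluster" runs it in the cluster.
        args += ["--deploy-mode", deploy_mode]
    args.append(app)
    return args


if __name__ == "__main__":
    for mode in MASTER_URLS:
        print(shlex.join(build_spark_submit(mode, "job.py")))
```

Returning a list rather than a single string keeps the arguments safe to hand to `subprocess.run` without shell quoting issues.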

Leveraging AWS for Spark Job Execution

AWS offers a suite of services to simplify Spark deployment, each tailored to specific use cases. These include fully managed platforms, serverless options, and containerized solutions. This section reviews the key AWS services for running Spark jobs and their observability features.

Amazon EMR (Elastic MapReduce)

Amazon EMR provides both a managed Hadoop ecosystem optimized for Spark jobs (EMR on EC2) and a serverless option (EMR Serverless). EMR on EC2 offers fine-grained control over cluster configuration, scaling, and resource allocation, making it well suited to customized, performance-intensive Spark jobs. In contrast, EMR Serverless removes cluster management entirely, providing a simpler, cost-efficient option for on-demand and dynamically scaled workloads.

Key Features:

- Dynamic cluster scaling for efficient resource utilization.

- Seamless integration with AWS services such as S3, DynamoDB, and Redshift.

- Cost efficiency through spot instances and savings plans.

Observability Tools:

- Monitoring: Amazon CloudWatch tracks the default cluster metrics and can be extended with detailed Spark metrics.

- Logging: EMR logs can be stored in Amazon S3 for long-term analysis.

- Activity Tracking: AWS CloudTrail provides audit trails for cluster activities.
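As an illustration of the serverless option with S3 logging, the sketch below builds the request for the EMR Serverless `StartJobRun` API. The function name `emr_serverless_job_request` and every identifier passed to it (application ID, role ARN, bucket paths) are hypothetical placeholders. The dictionary is only constructed here; submitting it requires boto3 and AWS credentials.

```python
def emr_serverless_job_request(application_id, role_arn, entry_point, log_uri,
                               args=(), executor_memory="4g"):
    """Build keyword arguments for the EMR Serverless StartJobRun API."""
    return {
        "applicationId": application_id,
        "executionRoleArn": role_arn,
        "jobDriver": {
            "sparkSubmit": {
                "entryPoint": entry_point,
                "entryPointArguments": list(args),
                "sparkSubmitParameters": f"--conf spark.executor.memory={executor_memory}",
            }
        },
        "configurationOverrides": {
            "monitoringConfiguration": {
                # Driver and executor logs are delivered under this S3 prefix.
                "s3MonitoringConfiguration": {"logUri": log_uri}
            }
        },
    }


# All identifiers below are placeholders, not real resources.
request = emr_serverless_job_request(
    application_id="00example1234567",
    role_arn="arn:aws:iam::123456789012:role/example-emr-serverless-role",
    entry_point="s3://example-bucket/jobs/etl.py",
    log_uri="s3://example-bucket/logs/",
    args=["--run-date", "2024-01-01"],
)
# With boto3 installed and credentials configured, this could be submitted as:
#   boto3.client("emr-serverless").start_job_run(**request)
```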

AWS Glue

AWS Glue is a serverless data integration service that supports Spark-based ETL (Extract, Transform, Load) workflows.

Key Features:

- Managed infrastructure eliminates administrative overhead.

- Built-in data catalog simplifies schema discovery.

- Automatic script generation accelerates ETL development.

Observability Tools:

- Metrics: CloudWatch captures Glue job execution metrics.

- State Tracking: Glue job bookmarks monitor the processing state.

- Audit Logging: Detailed activity logs via AWS CloudTrail.
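The observability features above are largely switched on through job arguments. The following sketch assembles a Glue `StartJobRun` request that enables job bookmarks, CloudWatch metrics, and continuous logging. The helper `glue_job_run_request` and the job name are hypothetical; the `--`-prefixed keys are Glue's special job parameters.

```python
def glue_job_run_request(job_name, enable_bookmarks=True, extra_args=None):
    """Build keyword arguments for the Glue StartJobRun API."""
    arguments = {
        # Bookmarks let a rerun skip input that was already processed.
        "--job-bookmark-option": (
            "job-bookmark-enable" if enable_bookmarks else "job-bookmark-disable"
        ),
        # Publishes job profiling metrics to CloudWatch.
        "--enable-metrics": "true",
        # Streams driver and executor logs to CloudWatch Logs while the job runs.
        "--enable-continuous-cloudwatch-log": "true",
    }
    arguments.update(extra_args or {})
    return {"JobName": job_name, "Arguments": arguments}


# "example-etl-job" and the custom argument are placeholders.
request = glue_job_run_request("example-etl-job", extra_args={"--run_date": "2024-01-01"})
# With boto3 installed and credentials configured, this could be submitted as:
#   boto3.client("glue").start_job_run(**request)
```

Bookmarks only take effect if the job script itself initializes and commits the job, so the argument alone is not sufficient.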

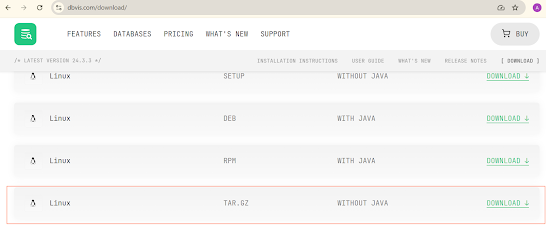

Databricks on AWS

Databricks on AWS is a fully managed third-party platform that integrates Apache Spark with a collaborative environment for data engineering, machine learning, and analytics. It streamlines Spark job deployment through optimized clusters, automated workflows, and native integration with AWS services, making it well suited to large-scale, collaborative big data applications.

Key Features of Databricks on AWS for Spark Jobs

- Optimized Performance: Databricks Runtime enhances Spark with proprietary performance optimizations for faster execution.

- Collaborative Environment: Supports shared notebooks for seamless collaboration across teams.

- Managed Clusters: Simplifies cluster creation, scaling, and lifecycle management.

- Auto-Scaling: Dynamically adjusts resources based on job requirements.

- Integration with AWS Ecosystem: Native integration with S3, Redshift, Glue, and other AWS services.

- Support for Multiple Workloads: Enables batch processing, real-time streaming, machine learning, and data science.

Observability Tools for Spark Jobs on Databricks

- Workspace Monitoring: Built-in dashboards for cluster utilization, job status, and resource metrics.

- Logging: Centralized logging of Spark events and application-level logs to Databricks workspace or S3.

- Alerting: Configurable alerts for job failures or resource issues via Databricks Job Alerts.

- Integration with Third-Party Tools: Supports Prometheus and Grafana for custom metric visualization.

- Audit Trails: Tracks workspace activities and changes using Databricks' event logging system.

- CloudWatch Integration: Enables tracking of Databricks job metrics and logs in Amazon CloudWatch for unified monitoring.

Amazon EKS (Elastic Kubernetes Service)

EKS allows Spark jobs to run within containerized environments orchestrated by Kubernetes.

AWS also offers Amazon EMR on EKS, a managed option for submitting Spark jobs to an existing EKS cluster.

Key Features:

- High portability for containerized Spark workloads.

- Integration with tools like Helm for deployment automation.

- Fine-grained resource control using Kubernetes namespaces.

Observability Tools:

- Monitoring: CloudWatch Container Insights offers detailed metrics.

- Visualization: Prometheus and Grafana enable advanced metric analysis.

- Tracing: AWS X-Ray supports distributed tracing for Spark workflows.
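For Spark running natively on Kubernetes, namespace isolation and Prometheus scraping are both controlled through Spark configuration properties. The sketch below collects a few of them and turns them into `spark-submit` arguments. The helper names, the namespace, the image URI, and the service account are assumptions made for illustration; the property keys are standard Spark settings.

```python
def k8s_spark_conf(namespace, image, executors=2, service_account="spark"):
    """Return Spark properties for a job running in a dedicated Kubernetes namespace."""
    return {
        # Driver and executor pods are created in this namespace.
        "spark.kubernetes.namespace": namespace,
        "spark.kubernetes.container.image": image,
        # The driver uses this service account to request executor pods.
        "spark.kubernetes.authenticate.driver.serviceAccountName": service_account,
        "spark.executor.instances": str(executors),
        # Exposes executor metrics in Prometheus format on the driver UI (Spark 3.0+).
        "spark.ui.prometheus.enabled": "true",
    }


def to_submit_args(conf):
    """Flatten a property dict into repeated --conf key=value arguments."""
    args = []
    for key in sorted(conf):
        args += ["--conf", f"{key}={conf[key]}"]
    return args


# Namespace and image URI are placeholders.
conf = k8s_spark_conf(
    namespace="analytics",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/example-spark:latest",
    executors=4,
)
submit_args = to_submit_args(conf)
```

Pairing one namespace per team with a Kubernetes resource quota is a common way to cap how much of the cluster a single Spark workload can claim.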

Amazon ECS (Elastic Container Service)

Amazon ECS supports running Spark jobs in Docker containers, offering flexibility in workload management.

Key Features:

- Simplified container orchestration with AWS Fargate support.

- Compatibility with custom container images.

- Integration with existing CI/CD pipelines.

Observability Tools:

- Metrics: CloudWatch tracks ECS task performance.

- Logs: Centralized container logs, queryable with Amazon CloudWatch Logs Insights.

- Tracing: AWS X-Ray provides distributed tracing for containerized workflows.
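One way to picture a Spark job on ECS is a single Fargate task that runs Spark in local mode and ships its output to CloudWatch Logs. The sketch below builds such a task definition as a dictionary. Running in local mode is an assumption of this example, as are the function name, the image URI, the role ARN, and the log group. It also assumes `spark-submit` is on the container's `PATH`.

```python
def spark_task_definition(family, image, app_path, log_group, region,
                          execution_role_arn, cpu="4096", memory="16384"):
    """Build keyword arguments for the ECS RegisterTaskDefinition API (Fargate)."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # required for Fargate tasks
        "cpu": cpu,               # CPU units; 4096 = 4 vCPU
        "memory": memory,         # MiB
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": "spark-driver",
                "image": image,
                "essential": True,
                # Local mode: driver and executors share this one container.
                "command": ["spark-submit", "--master", "local[*]", app_path],
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": log_group,
                        "awslogs-region": region,
                        "awslogs-stream-prefix": "spark",
                    },
                },
            }
        ],
    }


# Every identifier below is a placeholder.
task_def = spark_task_definition(
    family="example-spark-job",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/example-spark:latest",
    app_path="/opt/app/job.py",
    log_group="/ecs/example-spark-job",
    region="us-east-1",
    execution_role_arn="arn:aws:iam::123456789012:role/example-ecs-execution-role",
)
# With boto3 installed and credentials configured, this could be registered as:
#   boto3.client("ecs").register_task_definition(**task_def)
```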

Centralized Architecture for Observability

A unified architecture for managing Spark workflows across AWS services enhances scalability, monitoring, and troubleshooting. Below is a proposed framework.

Orchestration: AWS Step Functions coordinates workflows across EMR, Glue, EKS, and ECS.

Logging: Centralized log storage in S3 or CloudWatch Logs ensures searchability and compliance.

Monitoring: CloudWatch dashboards provide consolidated metrics. Kubernetes-specific insights are enabled using Prometheus and Grafana. Alarms notify users of threshold violations.

Alerting: Real-time notifications via Amazon SNS, with support for email, SMS, and Lambda-triggered automated responses.

Audit Trails: CloudTrail captures API-level activity, while tools like Athena enable historical log analysis.
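The orchestration and alerting pieces of this framework can be sketched as a Step Functions state machine definition. The example below chains a Glue job and a Spark step on an EMR cluster, and routes any failure to an SNS topic. The function name, state names, and all resource identifiers are hypothetical; the pipeline shape is one possible arrangement rather than a prescribed design.

```python
import json


def spark_pipeline_definition(glue_job_name, emr_cluster_id, spark_app_uri, sns_topic_arn):
    """Return an Amazon States Language definition as a Python dict."""
    notify_on_error = [{"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}]
    return {
        "Comment": "Glue ETL followed by a Spark step on EMR, with SNS alerting",
        "StartAt": "RunGlueJob",
        "States": {
            "RunGlueJob": {
                "Type": "Task",
                # The .sync suffix makes the state wait for the job to finish.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job_name},
                "Catch": notify_on_error,
                "Next": "RunEmrStep",
            },
            "RunEmrStep": {
                "Type": "Task",
                "Resource": "arn:aws:states:::elasticmapreduce:addStep.sync",
                "Parameters": {
                    "ClusterId": emr_cluster_id,
                    "Step": {
                        "Name": "spark-job",
                        "ActionOnFailure": "CONTINUE",
                        "HadoopJarStep": {
                            "Jar": "command-runner.jar",
                            "Args": ["spark-submit", "--deploy-mode", "cluster", spark_app_uri],
                        },
                    },
                },
                "Catch": notify_on_error,
                "End": True,
            },
            "NotifyFailure": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sns:publish",
                # After a Catch, the state input holds the Error and Cause fields.
                "Parameters": {"TopicArn": sns_topic_arn, "Message.$": "$.Cause"},
                "Next": "PipelineFailed",
            },
            "PipelineFailed": {"Type": "Fail", "Error": "SparkPipelineFailed"},
        },
    }


# All identifiers below are placeholders.
definition = spark_pipeline_definition(
    glue_job_name="example-etl-job",
    emr_cluster_id="j-EXAMPLE12345",
    spark_app_uri="s3://example-bucket/jobs/aggregate.py",
    sns_topic_arn="arn:aws:sns:us-east-1:123456789012:example-spark-alerts",
)
definition_json = json.dumps(definition, indent=2)
```

The resulting JSON string is what would be supplied as the state machine definition when creating it. Ending in a `Fail` state after the notification keeps the execution marked as failed, so dashboards and alarms still see the error.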

Conclusion

The ability to deploy Apache Spark jobs across various AWS services empowers organizations with the flexibility to choose optimal solutions for specific use cases. By implementing a centralized architecture for orchestration, logging, monitoring, and alerting, organizations can achieve seamless management, observability, and operational efficiency. This approach not only enhances Spark’s inherent scalability and performance but also ensures resilience in large-scale data workflows.