Steps for Java 8 Installation

..............................

Step 1: sudo apt update

Step 2: sudo apt install openjdk-8-jdk -y

Step 3: Verify the installation: java -version; javac -version
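Steps 1 to 3 should leave OpenJDK 8 on the PATH. As a minimal sketch (the class and method names are hypothetical), the same check can be made from Java by reading the standard java.version system property. Java 8 reports versions in the legacy 1.8.0_xxx form, while Java 9 and later report 9, 11.0.x and so on. On Java 8, java.home usually points at the jre subdirectory, whereas the JAVA_HOME set later in these steps is the JDK directory itself.

```java
// Hypothetical helper: confirms the running JVM is Java 8, the version this setup targets.
class JavaVersionCheck {

    // Extracts the major version from a java.version string.
    // "1.8.0_292" -> 8  (legacy scheme used up to Java 8)
    // "11.0.12"   -> 11 (scheme used from Java 9 onwards)
    static int majorVersion(String version) {
        String[] parts = version.split("[._-]");
        int first = Integer.parseInt(parts[0]);
        return first == 1 ? Integer.parseInt(parts[1]) : first;
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.version");
        int major = majorVersion(version);
        System.out.println("java.version = " + version + " (major " + major + ")");
        System.out.println("java.home    = " + System.getProperty("java.home"));
        if (major != 8) {
            System.out.println("Warning: these steps expect Java 8");
        }
    }
}
```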

Steps for Hadoop 3.3.0 Installation

......................................

Step 1: (Install OpenSSH server and client): sudo apt install openssh-server openssh-client -y

Step 2: Create a dedicated user for the Hadoop ecosystem: sudo adduser hdoop

Step 3: Switch to the new user: su - hdoop (repeat this step every time you start Hadoop)

Step 4: (Enable Passwordless SSH for Hadoop User) ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

Step 5: (Store the public key as authorized_keys in the ssh directory) cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Step 6: (Set the file permissions) chmod 0600 ~/.ssh/authorized_keys

Step 7: Connect to localhost over SSH as the new user to confirm that passwordless login works: ssh localhost (repeat this step every time you start Hadoop)
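Step 6 matters because OpenSSH's sshd, with its default StrictModes setting, may refuse key authentication when authorized_keys is writable by group or others. A minimal Java sketch of that check, assuming a POSIX file system; the class name is hypothetical:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.PosixFilePermission;
import java.util.Collections;
import java.util.EnumSet;
import java.util.Set;

// Hypothetical helper: flags an authorized_keys file that group or others can write to.
class AuthorizedKeysCheck {

    // Permission bits that commonly make sshd ignore the file.
    private static final Set<PosixFilePermission> TOO_OPEN = EnumSet.of(
            PosixFilePermission.GROUP_WRITE, PosixFilePermission.OTHERS_WRITE);

    // Safe when none of the "too open" bits are set (chmod 0600 satisfies this).
    static boolean isSafe(Path file) throws IOException {
        return Collections.disjoint(Files.getPosixFilePermissions(file), TOO_OPEN);
    }

    public static void main(String[] args) throws IOException {
        Path keys = Paths.get(System.getProperty("user.home"), ".ssh", "authorized_keys");
        if (!Files.exists(keys)) {
            System.out.println(keys + " not found; repeat Steps 4 and 5");
        } else {
            System.out.println(keys + (isSafe(keys) ? " looks fine" : " is too open; run chmod 0600"));
        }
    }
}
```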

Step 8: (download the tar): wget https://archive.apache.org/dist/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

Step 9: (untar): tar xzf hadoop-3.3.0.tar.gz

Step 10: (create symlink): ln -s /home/hdoop/hadoop-3.3.0/ /home/hdoop/hadoop

Step 11: (Set the following environment variables): nano ~/.bashrc (append the lines below at the end of the file)

#JAVA Related Options

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

#Hadoop Related Options

export HADOOP_HOME=/home/hdoop/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export HADOOP_CLASSPATH=${JAVA_HOME}/lib/tools.jar

#HIVE Related Options

export HIVE_HOME=/home/hdoop/hive

export PATH=$PATH:$HIVE_HOME/bin
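After sourcing ~/.bashrc, a quick sanity check is that the key variables are set and point at existing directories. A minimal Java sketch (hypothetical class name) that checks JAVA_HOME, HADOOP_HOME and HIVE_HOME; HIVE_HOME will only resolve once Hive has been unpacked in the Hive section. Files.isDirectory follows symlinks by default, so the hadoop and hive symlinks count as directories.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical helper: reports which .bashrc variables are unset or do not point at a directory.
class EnvCheck {

    static final List<String> REQUIRED = Arrays.asList("JAVA_HOME", "HADOOP_HOME", "HIVE_HOME");

    // Returns a list of problems; an empty list means every variable resolves to a directory.
    static List<String> problems(Map<String, String> env) {
        List<String> out = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.isEmpty()) {
                out.add(name + " is not set");
            } else if (!Files.isDirectory(Paths.get(value))) {
                out.add(name + "=" + value + " is not a directory");
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> found = problems(System.getenv());
        if (found.isEmpty()) {
            System.out.println("All variables look good");
        } else {
            found.forEach(System.out::println);
        }
    }
}
```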

Step 12: (Load the new variables into the current shell): source ~/.bashrc

Step 13: (Configure the Hadoop environment): nano $HADOOP_HOME/etc/hadoop/hadoop-env.sh (uncomment the JAVA_HOME line and set it as follows)

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

Step 14: (Configure Hadoop core): nano $HADOOP_HOME/etc/hadoop/core-site.xml (add the properties below inside the existing configuration element)

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hdoop/tmpdata</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://127.0.0.1:9000</value>

</property>
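The default file system value in core-site.xml (hdfs://127.0.0.1:9000) is a URI: the scheme selects the file system implementation, and host plus port form the NameNode's RPC address, against which absolute paths such as /input/test/file1.txt are resolved. A minimal sketch using java.net.URI rather than Hadoop's own Path class; the class name is hypothetical:

```java
import java.net.URI;

// Hypothetical helper: breaks the default file system URI from core-site.xml into its parts.
class DefaultFsUri {

    // "hdfs://127.0.0.1:9000" -> "127.0.0.1:9000", the NameNode address clients connect to.
    static String nameNodeAddress(String fsUri) {
        URI uri = URI.create(fsUri);
        return uri.getHost() + ":" + uri.getPort();
    }

    // Qualifies an absolute HDFS path, e.g. "/input/test/file1.txt", against the default file system.
    static URI qualify(String fsUri, String absolutePath) {
        return URI.create(fsUri).resolve(absolutePath);
    }

    public static void main(String[] args) {
        String fs = "hdfs://127.0.0.1:9000"; // value configured in core-site.xml
        System.out.println("scheme:   " + URI.create(fs).getScheme());
        System.out.println("NameNode: " + nameNodeAddress(fs));
        System.out.println("example:  " + qualify(fs, "/input/test/file1.txt"));
    }
}
```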

Step 15: (Create the /home/hdoop/tmpdata folder): mkdir /home/hdoop/tmpdata

Step 16: (Edit the HDFS site file): nano $HADOOP_HOME/etc/hadoop/hdfs-site.xml (add the entries below inside the existing configuration element)

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hdoop/dfsdata/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hdoop/dfsdata/datanode</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>
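A property name that appears twice is easy to miss in a long site file, and for a non-final property Hadoop keeps the value it reads last, so the earlier entry is silently ignored. A small check for repeated names can save debugging time. A minimal sketch using the JDK's built-in DOM parser rather than Hadoop's own Configuration class; the class name is hypothetical:

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: lists property names defined more than once in a *-site.xml file.
class DuplicatePropertyCheck {

    static List<String> duplicates(InputStream in) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
        NodeList props = doc.getElementsByTagName("property");
        Set<String> seen = new HashSet<>();
        List<String> repeated = new ArrayList<>();
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
            // Set.add returns false when the name was already present.
            if (!seen.add(name) && !repeated.contains(name)) {
                repeated.add(name);
            }
        }
        return repeated;
    }

    public static void main(String[] args) throws Exception {
        // Usage: java DuplicatePropertyCheck $HADOOP_HOME/etc/hadoop/hdfs-site.xml
        if (args.length != 1) {
            System.err.println("usage: DuplicatePropertyCheck <site.xml>");
            return;
        }
        try (InputStream in = new FileInputStream(args[0])) {
            List<String> dups = duplicates(in);
            System.out.println(dups.isEmpty() ? "No duplicate property names" : "Duplicates: " + dups);
        }
    }
}
```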

Step 17: (Configure the MapReduce site): nano $HADOOP_HOME/etc/hadoop/mapred-site.xml (add the property below inside the existing configuration element)

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

Step 18: (Configure YARN): nano $HADOOP_HOME/etc/hadoop/yarn-site.xml (add the properties below inside the existing configuration element)

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>127.0.0.1</value>

</property>

<property>

<name>yarn.acl.enable</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>
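For each service listed in yarn.nodemanager.aux-services, the NodeManager looks up the implementing class under a key built from the service name, yarn.nodemanager.aux-services.&lt;service&gt;.class, so the key must spell the service name exactly (mapreduce_shuffle, with an underscore). A minimal sketch (hypothetical class name) that derives the expected keys from a comma-separated service list:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: builds the per-service class keys that yarn-site.xml must define.
class AuxServiceKeys {

    // Follows the naming pattern yarn.nodemanager.aux-services.<service>.class
    static String classKey(String service) {
        return String.format("yarn.nodemanager.aux-services.%s.class", service.trim());
    }

    // Splits the yarn.nodemanager.aux-services value and maps each service to its class key.
    static List<String> classKeys(String auxServices) {
        List<String> keys = new ArrayList<>();
        for (String service : auxServices.split(",")) {
            if (!service.trim().isEmpty()) {
                keys.add(classKey(service));
            }
        }
        return keys;
    }

    public static void main(String[] args) {
        // Value of yarn.nodemanager.aux-services from yarn-site.xml
        classKeys("mapreduce_shuffle").forEach(System.out::println);
    }
}
```

Running main prints yarn.nodemanager.aux-services.mapreduce_shuffle.class, the name to compare against the second property in the block above.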

Step 19: (Format the NameNode, once only; reformatting erases the HDFS metadata): hdfs namenode -format

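Step 20: Start HDFS and YARN with start-dfs.sh and start-yarn.sh (both are in $HADOOP_HOME/sbin, which is on the PATH), then play around with the hdfs dfs command (file already provided)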
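Once the daemons are up, jps should list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. A complementary check is whether the NameNode address from core-site.xml (127.0.0.1:9000) accepts TCP connections. A minimal Java sketch with a hypothetical class name:

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether something is listening on host:port.
class PortCheck {

    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused or timed out: nothing is accepting connections there.
            return false;
        }
    }

    public static void main(String[] args) {
        // 127.0.0.1:9000 is the NameNode address configured in core-site.xml
        boolean up = isOpen("127.0.0.1", 9000, 2000);
        System.out.println("NameNode port 9000 " + (up ? "is accepting connections" : "is not reachable"));
    }
}
```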

Steps for running WordCount MapReduce Programs

.................................................

Step 1: Create the following directory to store your MapReduce application (optional) and work from it: mkdir -p /home/hdoop/application/mapreduce_app/wordcount_app/ && cd /home/hdoop/application/mapreduce_app/wordcount_app/

Step 2: create the mapreduce java application file: nano WordCount.java

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
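To see what the job computes without a cluster, its phases can be imitated in plain Java. This is only a sketch of the data flow (no Hadoop classes; the class name is hypothetical): the map phase emits (word, 1) for every whitespace-separated token as TokenizerMapper does, grouping by key stands in for the shuffle, and the reduce phase sums each group as IntSumReducer does. Because summing is associative and commutative, the same reducer can also run as the combiner, which is why the job sets IntSumReducer for both.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical, Hadoop-free imitation of the WordCount data flow.
class LocalWordCount {

    static Map<String, Integer> count(List<String> lines) {
        // Map phase: one (word, 1) pair per token, tokenized like TokenizerMapper.
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                pairs.add(new AbstractMap.SimpleEntry<>(itr.nextToken(), 1));
            }
        }
        // Shuffle phase: group values by key; TreeMap keeps keys sorted, as keys reaching a reducer are.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        // Reduce phase: sum the values for each key, like IntSumReducer.
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int v : e.getValue()) {
                sum += v;
            }
            result.put(e.getKey(), sum);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("hello hadoop", "hello hive");
        count(input).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

Running main prints hadoop 1, hello 2 and hive 1, one tab-separated pair per line.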

Step 3: Compile the Java file (this relies on HADOOP_CLASSPATH pointing to tools.jar, as set in .bashrc): hadoop com.sun.tools.javac.Main WordCount.java

Step 4: create the jar file: jar cf wc.jar WordCount*.class

Step 5: submit the mapreduce program to the cluster: hadoop jar wc.jar WordCount /input/test/file1.txt /output/test/

(Before Step 5, create the input HDFS path and upload a file1.txt containing some text: hdfs dfs -mkdir -p /input/test, then hdfs dfs -put file1.txt /input/test/)

(Do not create the output path; the job fails if /output/test/ already exists)
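When the job finishes, the results land in the output directory as part-r-NNNNN files (one per reducer) next to an empty _SUCCESS marker; hdfs dfs -cat /output/test/part-r-00000 prints them. Each line holds a word and its count separated by a tab, the default separator of TextOutputFormat. A minimal sketch (hypothetical class name) that reads such lines back into a map, for example after copying the file out with hdfs dfs -get:

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: parses WordCount output lines of the form "word<TAB>count".
class WordCountOutput {

    static Map<String, Long> parse(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t'); // the count follows the last tab
            if (tab < 0) {
                continue; // skip anything that is not a key/value line
            }
            counts.put(line.substring(0, tab), Long.parseLong(line.substring(tab + 1).trim()));
        }
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java WordCountOutput part-r-00000   (a local copy fetched with hdfs dfs -get)
        List<String> lines = Files.readAllLines(Paths.get(args[0]), StandardCharsets.UTF_8);
        parse(lines).forEach((word, n) -> System.out.println(word + " -> " + n));
    }
}
```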

....................Hive 3.1.2 Installation Steps .......

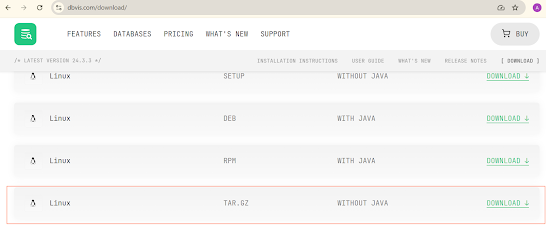

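Step 1: Download the tar to /home/hdoop/: wget https://apache.root.lu/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz (if this mirror no longer carries the release, use https://archive.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz)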

Step 2: Untar the tar file: tar xzf apache-hive-3.1.2-bin.tar.gz

Step 3: Create a symlink: ln -s /home/hdoop/apache-hive-3.1.2-bin /home/hdoop/hive

Step 4: Make sure ~/.bashrc contains the following environment variables (they were already added in Step 11 of the Hadoop installation), then run source ~/.bashrc:

export HIVE_HOME=/home/hdoop/hive

export PATH=$PATH:$HIVE_HOME/bin/

Step 5: Raise the memory for map and reduce tasks: nano $HADOOP_HOME/etc/hadoop/mapred-site.xml (add the following properties inside the existing configuration element)

<property>

<name>mapreduce.map.memory.mb</name>

<value>4096</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>4096</value>

</property>
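Each map or reduce task now asks YARN for a 4096 MB container. As a rough worked example, assuming the effective defaults yarn.nodemanager.resource.memory-mb = 8192 and yarn.scheduler.minimum-allocation-mb = 1024 (check your yarn-site.xml, since both can be overridden), the default Capacity Scheduler rounds each request up to a multiple of the minimum allocation, so a single node can run only a couple of such containers at once. A minimal sketch of that arithmetic with hypothetical names; it ignores the ApplicationMaster's own container and CPU limits:

```java
// Hypothetical worked example of YARN container arithmetic on a single node.
class ContainerMath {

    // Rounds a memory request up to the next multiple of the scheduler's minimum allocation.
    static int normalize(int requestMb, int minAllocationMb) {
        return ((requestMb + minAllocationMb - 1) / minAllocationMb) * minAllocationMb;
    }

    // How many containers of the (normalized) size fit into the NodeManager's memory.
    static int containersPerNode(int nodeMemoryMb, int requestMb, int minAllocationMb) {
        return nodeMemoryMb / normalize(requestMb, minAllocationMb);
    }

    public static void main(String[] args) {
        int nodeMemoryMb = 8192;    // assumed yarn.nodemanager.resource.memory-mb
        int minAllocationMb = 1024; // assumed yarn.scheduler.minimum-allocation-mb
        int taskMb = 4096;          // mapreduce.map.memory.mb / mapreduce.reduce.memory.mb above
        System.out.println("Containers per node: "
                + containersPerNode(nodeMemoryMb, taskMb, minAllocationMb));
    }
}
```

With these assumed values main prints "Containers per node: 2".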

Step 6: Restart Hadoop

cd /home/hdoop/hadoop/sbin

./stop-all.sh

./start-all.sh

Step 7: Create the temp and warehouse folders in HDFS and assign permissions

hdfs dfs -mkdir -p /user/hive/warehouse

hdfs dfs -mkdir -p /tmp/hive

hdfs dfs -chmod 777 /tmp

hdfs dfs -chmod 777 /tmp/hive

hdfs dfs -chmod 777 /user/hive/warehouse
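HDFS accepts the same octal modes as the local chmod, and hdfs dfs -ls shows them in rwx form. Mode 777 grants read, write and execute to owner, group and others, which is convenient on a single-user sandbox but far too open for a shared cluster. A minimal sketch (hypothetical class name) that decodes an octal mode into that rwx form:

```java
// Hypothetical helper: turns an octal mode such as "777" or "0600" into "rwxrwxrwx"-style text.
class OctalMode {

    static String toSymbolic(String octal) {
        if (octal.length() < 3) {
            throw new IllegalArgumentException("need at least three octal digits: " + octal);
        }
        // Keep the last three digits: owner, group, others (a leading 0 or sticky digit is ignored).
        String digits = octal.substring(octal.length() - 3);
        StringBuilder sb = new StringBuilder();
        for (char c : digits.toCharArray()) {
            int bits = c - '0';
            if (bits < 0 || bits > 7) {
                throw new IllegalArgumentException("not an octal mode: " + octal);
            }
            sb.append((bits & 4) != 0 ? 'r' : '-');
            sb.append((bits & 2) != 0 ? 'w' : '-');
            sb.append((bits & 1) != 0 ? 'x' : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        for (String mode : new String[] {"777", "755", "0600"}) {
            System.out.println(mode + " -> " + toSymbolic(mode));
        }
    }
}
```

Running main prints 777 -> rwxrwxrwx, 755 -> rwxr-xr-x and 0600 -> rw-------.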

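Step 8: Delete the duplicate log4j-slf4j binding (Hadoop already ships its own SLF4J binding, and two bindings on the classpath trigger "multiple SLF4J bindings" warnings)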

ls /home/hdoop/hive/lib/log4j-slf4j-impl-*.jar

rm /home/hdoop/hive/lib/log4j-slf4j-impl-2.10.0.jar

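Step 9: Replace the Guava JAR (Hive 3.1.2 bundles guava-19.0.jar, which conflicts with the newer Guava shipped with Hadoop 3.3.0 and makes Hive fail at startup with a NoSuchMethodError)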

ls /home/hdoop/hadoop/share/hadoop/common/lib/guava*.jar

ls /home/hdoop/hive/lib/guava*.jar

cp /home/hdoop/hadoop/share/hadoop/common/lib/guava*.jar /home/hdoop/hive/lib/

rm /home/hdoop/hive/lib/guava-19.0.jar
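The ls commands above confirm which Guava versions each side ships. As a minimal sketch (hypothetical class name), the same comparison can be done in Java with a glob over both lib directories; after the copy and the rm, Hive's lib should list the same Guava JAR as Hadoop's:

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper: lists the guava*.jar files in a directory, like the ls commands above.
class GuavaJars {

    static List<String> find(Path libDir) throws IOException {
        List<String> names = new ArrayList<>();
        try (DirectoryStream<Path> jars = Files.newDirectoryStream(libDir, "guava*.jar")) {
            for (Path jar : jars) {
                names.add(jar.getFileName().toString());
            }
        }
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) throws IOException {
        List<String> hadoop = find(Paths.get("/home/hdoop/hadoop/share/hadoop/common/lib"));
        List<String> hive = find(Paths.get("/home/hdoop/hive/lib"));
        System.out.println("Hadoop: " + hadoop);
        System.out.println("Hive:   " + hive);
        System.out.println(hadoop.equals(hive) ? "Guava versions match" : "Guava versions differ");
    }
}
```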

Step 10: Initialize the Derby database

/home/hdoop/hive/bin/schematool -initSchema -dbType derby

A metastore_db directory should be created in the current working directory upon successful execution
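With the embedded Derby metastore, Hive's default connection URL names the database relatively (jdbc:derby:;databaseName=metastore_db;create=true). Derby resolves such names against derby.system.home if it is set, otherwise against the working directory, so hive should later be started from the same directory schematool ran in. A minimal sketch (hypothetical class name) showing where metastore_db would live:

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: shows where an embedded Derby database named metastore_db would live.
class DerbyLocation {

    // Derby resolves a relative database name against derby.system.home if set, else user.dir.
    static Path metastorePath(String derbySystemHome, String userDir) {
        String base = (derbySystemHome != null) ? derbySystemHome : userDir;
        return Paths.get(base, "metastore_db").toAbsolutePath();
    }

    public static void main(String[] args) {
        Path db = metastorePath(System.getProperty("derby.system.home"), System.getProperty("user.dir"));
        System.out.println(db + (Files.isDirectory(db) ? " exists" : " does not exist here"));
    }
}
```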

Step 11: Configure yarn-site.xml: nano $HADOOP_HOME/etc/hadoop/yarn-site.xml

(Add the properties below at the end, inside the existing configuration element)

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4</value>

</property>

Step 12: Start Hive: hive

Create some tables and check that HQL queries are able to trigger MapReduce jobs
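The two memory properties added to yarn-site.xml before starting Hive interact as follows: the NodeManager allows a container virtual memory up to its physical limit multiplied by yarn.nodemanager.vmem-pmem-ratio (default 2.1), and kills containers that exceed it only while yarn.nodemanager.vmem-check-enabled is true. A worked sketch of that limit for the 4096 MB task containers configured for Hive (hypothetical names):

```java
// Hypothetical worked example of YARN's virtual-memory limit per container.
class VmemLimit {

    // Virtual memory allowed = physical container size * vmem-pmem-ratio.
    static long vmemLimitMb(long containerMb, double vmemPmemRatio) {
        return Math.round(containerMb * vmemPmemRatio);
    }

    public static void main(String[] args) {
        long containerMb = 4096; // mapreduce.map.memory.mb set in mapred-site.xml for Hive
        System.out.println("ratio 2.1 (default):  " + vmemLimitMb(containerMb, 2.1) + " MB");
        System.out.println("ratio 4 (configured): " + vmemLimitMb(containerMb, 4) + " MB");
    }
}
```

Raising the ratio to 4 lifts the limit to 16384 MB; disabling the check removes it altogether, so either setting alone avoids virtual-memory kills.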

...................MySQL as Metastore for Hive 3.1.2.......

Step 1: Install MySQL

sudo apt update

sudo apt install mysql-server

Step 2: Ensure the MySQL server is running: sudo systemctl start mysql.service

Step 3: Create user credentials for hive

sudo mysql

CREATE USER 'hiveuser'@'localhost' IDENTIFIED BY 'Hive111!!!';

GRANT ALL PRIVILEGES ON *.* TO 'hiveuser'@'localhost';

Step 4: Download mysql-connector-java-8.0.28.jar to /home/hdoop/hive/lib/

cd /home/hdoop/hive/lib/

wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.28/mysql-connector-java-8.0.28.jar
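Hive loads the JDBC driver class named in hive-site.xml (com.mysql.cj.jdbc.Driver for Connector/J 8.x) from the JARs in its lib directory. A minimal sketch (hypothetical class name) to confirm the downloaded JAR actually provides that class; run it with the JAR on the classpath, for example java -cp /home/hdoop/hive/lib/mysql-connector-java-8.0.28.jar:. DriverCheck

```java
// Hypothetical helper: checks whether a JDBC driver class can be loaded from the classpath.
class DriverCheck {

    static boolean isLoadable(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = "com.mysql.cj.jdbc.Driver"; // Connector/J 8.x driver class
        System.out.println(driver + (isLoadable(driver) ? " found" : " NOT found; check the JAR location"));
    }
}
```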

Step 5: create hive-site.xml file in /home/hdoop/hive/conf/

nano /home/hdoop/hive/conf/hive-site.xml

(add the below configurations)

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost/metastore_db?createDatabaseIfNotExist=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>Hive111!!!</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hiveuser</value>

<description>Username to use against metastore database</description>

</property>

</configuration>
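The connection URL sits inside XML, so if you append more query parameters, each literal ampersand between them must be written as `&amp;`, or Hive will fail to parse hive-site.xml. As a minimal sketch (the helper is hypothetical, and useSSL=false is shown only as an example of an extra Connector/J parameter), the URL can be built in Java and escaped before pasting it into the value element:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: builds a MySQL JDBC URL and escapes it for use inside hive-site.xml.
class MetastoreUrl {

    static String jdbcUrl(String host, String database, Map<String, String> params) {
        StringJoiner query = new StringJoiner("&");
        params.forEach((k, v) -> query.add(k + "=" + v));
        String base = "jdbc:mysql://" + host + "/" + database;
        return params.isEmpty() ? base : base + "?" + query;
    }

    // Escapes the five XML special characters so the URL can sit inside a value element.
    static String xmlEscape(String s) {
        return s.replace("&", "&amp;") // must run first so later entities are not re-escaped
                .replace("<", "&lt;")
                .replace(">", "&gt;")
                .replace("\"", "&quot;")
                .replace("'", "&apos;");
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("createDatabaseIfNotExist", "true");
        params.put("useSSL", "false"); // example extra parameter
        String url = jdbcUrl("localhost", "metastore_db", params);
        System.out.println(url);
        System.out.println("<value>" + xmlEscape(url) + "</value>");
    }
}
```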

Step 6: Initialize the Hive schema with schematool

/home/hdoop/hive/bin/schematool -initSchema -dbType mysql

Log in to MySQL and check whether the metastore_db database has been created (SHOW DATABASES;)
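The same check can be scripted over JDBC with the hiveuser account from Step 3. A minimal sketch (hypothetical class name) that assumes Connector/J is on the classpath and MySQL listens on localhost; it runs SHOW DATABASES and looks for metastore_db:

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical helper: checks over JDBC whether the metastore database was created.
class MetastoreDbCheck {

    static boolean databaseExists(String url, String user, String password, String database) {
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SHOW DATABASES")) {
            while (rs.next()) {
                if (database.equals(rs.getString(1))) {
                    return true;
                }
            }
            return false;
        } catch (SQLException e) {
            // No driver, server down, or bad credentials: report it and treat the database as missing.
            System.err.println("Could not check: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        // Credentials from the CREATE USER statement in Step 3
        boolean ok = databaseExists("jdbc:mysql://localhost/", "hiveuser", "Hive111!!!", "metastore_db");
        System.out.println(ok ? "metastore_db exists" : "metastore_db not found");
    }
}
```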

Step 7: Restart Hadoop and Hive, and check that HQL queries run